
This guide assumes you manage your infrastructure with Terraform.

Here is an example IRSA implementation using Terraform and kubectl.

Background

Let's say you want to allow your EKS-hosted app to access an AWS service. You have a couple of options to do so, depending on the application:

- Inject AWS IAM user credentials (access key/secret key) into your pods as Kubernetes secrets or environment variables.

- Have your pods use the IAM role of the EKS nodes they run on (the EC2 instance profile).

But how can you achieve the same in a secure and scalable way? IRSA is your friend.

What is IRSA?

IRSA stands for IAM Roles for Service Accounts. It is a method for linking an AWS IAM role to a Kubernetes service account used by a pod. This method offers several advantages:

You specify the Kubernetes service account (and namespace) that has access and trust to assume the corresponding IAM role. No other service account will be able to do so.

You can easily track the access events in AWS CloudTrail for a specific combination of IAM role and service account.

You can make the access as specific and granular as you need via IAM (role) policies.

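Every EKS cluster hosts its own unique, public OIDC discovery endpoint. Your workloads use it to authenticate with AWS STS and then access other AWS services. For IRSA, the cluster's OIDC issuer must also be registered as an IAM OIDC identity provider in your AWS account.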

Ok cool, what is OIDC then?

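OIDC (OpenID Connect) is an authentication protocol that adds an identity layer on top of OAuth 2.0. A trusted provider issues signed tokens, and users or services present those tokens to prove who they are. The party relying on those tokens never has to store or maintain their credentials.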

Here you can find more info about OIDC.

Kubernetes supports various authentication strategies, and OpenID Connect is one of them.
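To make the token side of OIDC more concrete: an OIDC identity token is a JWT. That is three base64url-encoded segments (header, payload, signature) joined by dots. The minimal Python sketch below decodes the payload to show claims such as iss, sub and aud.

It does **not** verify the signature, so treat it as an inspection aid only. The helper names and the unsigned sample token are hypothetical, made up for illustration.

```python
import base64
import json


def _b64url_encode(data: bytes) -> str:
    # JWT segments use base64url without "=" padding
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # Restore the padding that JWTs strip before decoding
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def decode_jwt_claims(token: str) -> dict:
    """Return the payload claims of a JWT WITHOUT verifying its signature."""
    _header_b64, payload_b64, _signature_b64 = token.split(".")
    return json.loads(_b64url_decode(payload_b64))


def make_unsigned_sample_token(claims: dict) -> str:
    """Build a hypothetical, unsigned token purely for illustration."""
    header = {"alg": "none", "typ": "JWT"}
    return ".".join([
        _b64url_encode(json.dumps(header).encode()),
        _b64url_encode(json.dumps(claims).encode()),
        "",  # empty signature segment: this sample token is NOT signed
    ])


if __name__ == "__main__":
    sample = make_unsigned_sample_token({
        "iss": "https://oidc.eks.<region>.amazonaws.com/id/<cluster_oidc_id>",
        "sub": "system:serviceaccount:irsa-sample-ns:irsa-test",
        "aud": ["sts.amazonaws.com"],
    })
    print(decode_jwt_claims(sample)["sub"])
```

Running it prints the sub claim, system:serviceaccount:irsa-sample-ns:irsa-test. That is the same namespace + service account value the IAM trust policy in this guide matches on.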

What does the workflow look like?

Involved parties

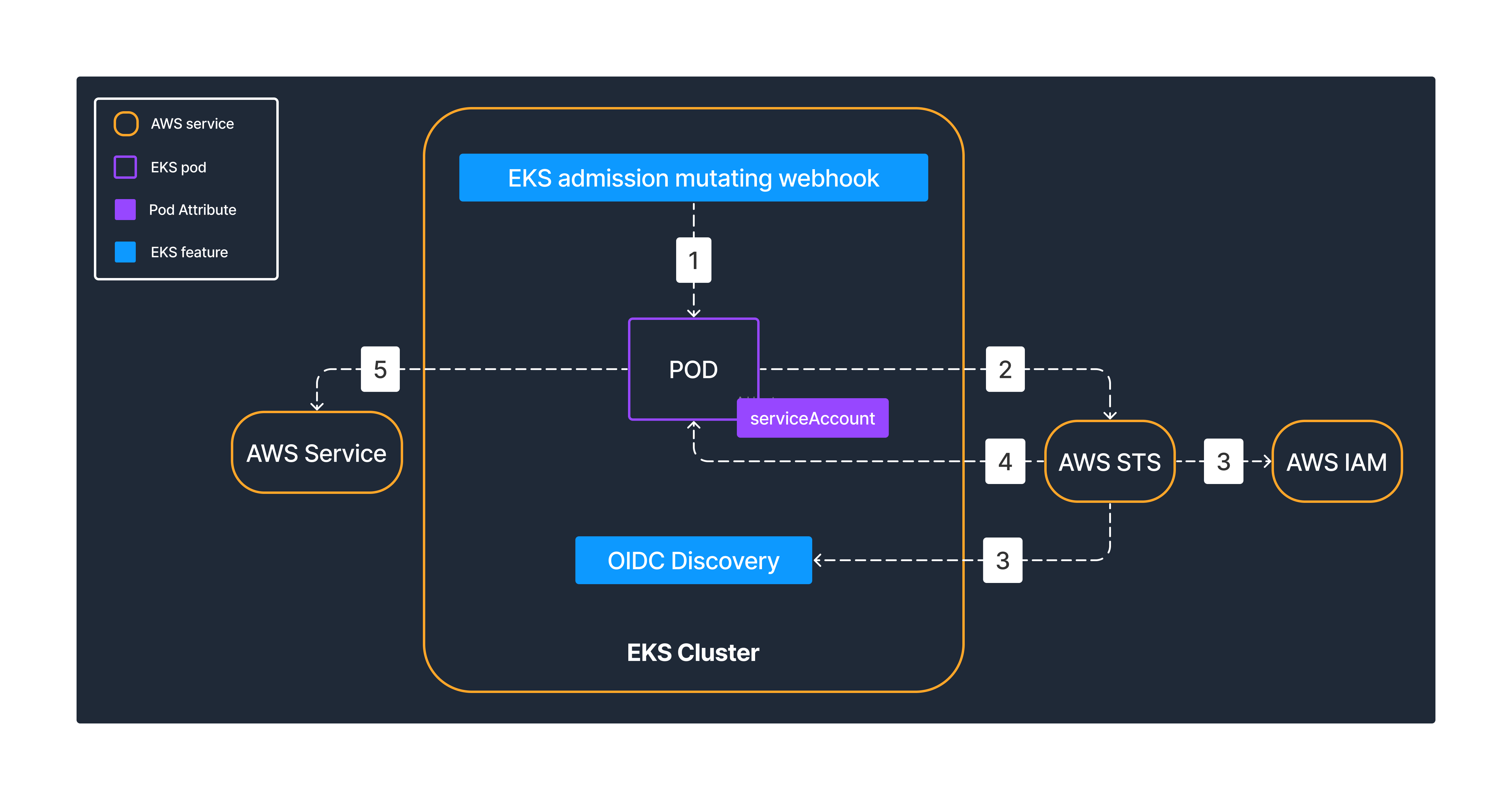

The authentication is done using three main components:

SDK (client app) running in a pod.

OIDC discovery endpoint (hosted by EKS cluster).

(Kubernetes) service account.

When a pod runs under a service account that carries an IRSA annotation, it receives a JWT token (a projected service account token) signed by the cluster and issued for the cluster's OIDC issuer.

This annotation links the service account to the IAM role it needs to assume:

```yaml
metadata:
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::<aws_account_id>:role/your-role
```


When that service account is attached to a pod, it will automatically inject two new environment variables:

- AWS_ROLE_ARN - the IAM role ARN.
- AWS_WEB_IDENTITY_TOKEN_FILE - the path to the JWT token.

It will also mount the JWT token into that pod as a volume.
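Those two variables are all a web-identity-aware SDK needs. Here's a minimal Python sketch that reads them and assembles the inputs of an STS AssumeRoleWithWebIdentity request. It assumes a plain mapping stands in for the pod environment.

RoleArn, RoleSessionName and WebIdentityToken are the STS API parameter names. The function name and the session-name format are hypothetical choices, not what botocore actually does internally.

```python
import os
import time
from typing import Mapping, Optional


def web_identity_request_params(env: Mapping[str, str]) -> Optional[dict]:
    """Build AssumeRoleWithWebIdentity inputs from the IRSA env vars.

    Returns None when either variable is missing (no IRSA for this pod).
    """
    role_arn = env.get("AWS_ROLE_ARN")
    token_file = env.get("AWS_WEB_IDENTITY_TOKEN_FILE")
    if not role_arn or not token_file:
        return None
    # Read the projected token from disk; it is refreshed periodically,
    # so it should be re-read rather than cached forever.
    with open(token_file, encoding="utf-8") as fh:
        token = fh.read().strip()
    return {
        "RoleArn": role_arn,
        "RoleSessionName": f"irsa-sketch-{int(time.time())}",  # hypothetical format
        "WebIdentityToken": token,
    }


if __name__ == "__main__":
    params = web_identity_request_params(os.environ)
    if params is None:
        print("No IRSA variables found; an SDK would try its next credential source.")
    else:
        # Never print the token itself; it is a bearer credential.
        print("Would assume:", params["RoleArn"])
```

The function returns None instead of raising. This mirrors how an SDK treats missing variables: it simply moves on to its next credential source.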

The process

1. A Kubernetes service account (with the IAM role ARN annotation) is attached to a pod. The EKS mutating admission webhook in the control plane then does two things:
   - It injects two environment variables, AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE.
   - It mounts a volume containing an OIDC-issued JWT token.
2. The client SDK (running in a pod with the service account attached) performs an AWS API call (e.g. eks:ListClusters). Under the hood, it first sends an AssumeRoleWithWebIdentity request to AWS STS. That request carries two things:
   - the JWT token;
   - the ARN of the role to assume, taken from the service account annotation.
3. AWS STS checks the IAM role's trust (assume role) policy conditions. They contain the cluster's OIDC provider ID together with the Kubernetes namespace and service account name the call comes from.
4. If all checks pass, STS sends back a set of temporary credentials for the IAM role to the client SDK.
5. The client SDK now uses these temporary credentials. They allow access to the AWS resources defined in the role's policies.
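The trust check described above is essentially a string comparison. STS compares token claims against StringEquals conditions keyed by the issuer, without its https:// prefix.

The Python sketch below is a deliberately simplified model under our own assumptions:
- claims are already decoded;
- signature validation, token expiry and other condition operators are ignored.

It is not how STS is implemented, and the issuer value is a placeholder.

```python
from typing import Dict, List

# Placeholder issuer, not a real cluster
ISSUER = "https://oidc.eks.<region>.amazonaws.com/id/<cluster_oidc_id>"


def issuer_key(issuer_url: str) -> str:
    """Trust-policy condition keys use the issuer URL without its scheme."""
    return issuer_url.replace("https://", "", 1)


def claims_satisfy_trust_policy(claims: Dict[str, object], issuer_url: str,
                                string_equals: Dict[str, List[str]]) -> bool:
    """Simplified StringEquals evaluation; all conditions must match (AND)."""
    if claims.get("iss") != issuer_url:
        return False  # token comes from a different cluster/provider
    prefix = issuer_key(issuer_url) + ":"
    for key, allowed in string_equals.items():
        if not key.startswith(prefix):
            return False  # condition about another provider: key absent, no match
        claim = claims.get(key[len(prefix):])
        # "aud" may be a list in real tokens; accept any matching entry
        candidates = claim if isinstance(claim, list) else [claim]
        if not any(c in allowed for c in candidates):
            return False
    return True


# The two conditions used for irsa-sample-ns/irsa-test in this guide
CONDITIONS = {
    f"{issuer_key(ISSUER)}:sub": ["system:serviceaccount:irsa-sample-ns:irsa-test"],
    f"{issuer_key(ISSUER)}:aud": ["sts.amazonaws.com"],
}

if __name__ == "__main__":
    token_claims = {
        "iss": ISSUER,
        "sub": "system:serviceaccount:irsa-sample-ns:irsa-test",
        "aud": ["sts.amazonaws.com"],
    }
    print(claims_satisfy_trust_policy(token_claims, ISSUER, CONDITIONS))  # True
    other = dict(token_claims, sub="system:serviceaccount:default:default")
    print(claims_satisfy_trust_policy(other, ISSUER, CONDITIONS))  # False
```

The second call fails only because the token was issued to a different service account. That is what "No other service account will be able to do so" means in practice.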

Implementation

You will need

What we will create

- IAM side:
  - role.
  - assume policy.
  - identity-based policy.
- Kubernetes side:
  - namespace.
  - service account.
  - job.

Create an IAM role + policies

Add the following Terraform code and run terraform apply. It will create an IAM role with two policies attached.

```hcl
locals {
  namespace      = "irsa-sample-ns" # this is the namespace we will create within the EKS cluster.
  serviceaccount = "irsa-test"      # this will be the service account the job will use.
}

data "aws_eks_cluster" "this" { # get EKS cluster attributes to use later on.
  name = "your-eks-cluster-name"
}

# Look up the IAM OIDC identity provider registered for the cluster's issuer.
# Assumes it already exists (e.g. created with the aws_iam_openid_connect_provider resource).
data "aws_iam_openid_connect_provider" "this" {
  url = data.aws_eks_cluster.this.identity[0].oidc[0].issuer
}

data "aws_iam_policy_document" "assume-policy" { # create an assume policy for STS + a combination of namespace + service account.
  statement {
    actions = ["sts:AssumeRoleWithWebIdentity"]

    condition {
      test     = "StringEquals"
      variable = "${replace(data.aws_eks_cluster.this.identity[0].oidc[0].issuer, "https://", "")}:sub"
      values   = [join(":", ["system:serviceaccount", local.namespace, local.serviceaccount])]
    }

    condition {
      test     = "StringEquals"
      variable = "${replace(data.aws_eks_cluster.this.identity[0].oidc[0].issuer, "https://", "")}:aud"
      values   = ["sts.amazonaws.com"]
    }

    principals {
      type = "Federated"
      # Must be the ARN of the IAM OIDC provider; the bare issuer URL is not a valid principal.
      identifiers = [data.aws_iam_openid_connect_provider.this.arn]
    }
  }
}

resource "aws_iam_role" "irsa-role" { # create the IAM role and attach both assume and inline identity based policies.
  name               = "irsa-role"
  path               = "/"
  assume_role_policy = data.aws_iam_policy_document.assume-policy.json

  inline_policy {
    name = "eks-list"
    policy = jsonencode({
      Version = "2012-10-17"
      Statement = [{
        Action   = ["eks:ListClusters"]
        Effect   = "Allow"
        Resource = "*"
      }]
    })
  }
}
```

As described, the assume (trust) policy contains two conditions. Both are keyed on the cluster's OIDC issuer with the https:// prefix stripped:

- <issuer>:aud = sts.amazonaws.com, allowing AWS STS interaction.
- <issuer>:sub = system:serviceaccount:irsa-sample-ns:irsa-test, matching the correct namespace + service account name.

It also has a federated principal pointing to the cluster's IAM OIDC provider. These three elements together are what allow the final assume action.

The second policy (identity-based) allows the role to perform an eks:ListClusters API call.
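Maybe you are not using Terraform, or you just want to see roughly what data.aws_iam_policy_document renders. The Python sketch below builds an equivalent trust policy as a dict.

The account ID and issuer URL are placeholders, and the function name is hypothetical. The Federated principal follows the format IAM uses for OIDC identity providers: arn:aws:iam::<account_id>:oidc-provider/<issuer without https://>.

```python
import json


def irsa_trust_policy(account_id: str, issuer_url: str,
                      namespace: str, service_account: str) -> dict:
    """Return an IRSA trust policy equivalent to the Terraform example."""
    issuer = issuer_url.replace("https://", "", 1)  # condition keys drop the scheme
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "sts:AssumeRoleWithWebIdentity",
            # Federated principal = ARN of the cluster's IAM OIDC provider
            "Principal": {"Federated": f"arn:aws:iam::{account_id}:oidc-provider/{issuer}"},
            "Condition": {
                "StringEquals": {
                    f"{issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                    f"{issuer}:aud": "sts.amazonaws.com",
                }
            },
        }],
    }


if __name__ == "__main__":
    policy = irsa_trust_policy(
        "111122223333",  # placeholder account ID
        "https://oidc.eks.<region>.amazonaws.com/id/<cluster_oidc_id>",
        "irsa-sample-ns",
        "irsa-test",
    )
    print(json.dumps(policy, indent=2))
```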

Create a test namespace

```shell
kubectl create ns irsa-sample-ns
```

Create a Kubernetes service account

```shell
cat <<EoF > serviceAccount-eks.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: irsa-test
  namespace: irsa-sample-ns
EoF

kubectl apply -f serviceAccount-eks.yaml
```

Create a Kubernetes job

```shell
cat <<EoF > job-eks.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: eks-iam-test-eks
  namespace: irsa-sample-ns
spec:
  template:
    metadata:
      labels:
        app: eks-iam-test-eks
    spec:
      serviceAccountName: irsa-test
      containers:
        - name: eks-iam-test
          image: amazon/aws-cli:latest
          args: ["eks", "list-clusters"]
      restartPolicy: Never
  backoffLimit: 0
EoF

kubectl apply -f job-eks.yaml
```

This job will create a pod in the irsa-sample-ns namespace, using the official aws-cli image and carrying an app=eks-iam-test-eks label.

It will then run aws eks list-clusters and finish. In this first iteration, the job should fail: nothing yet grants this job (pod) permission to call eks:ListClusters.

Let's check its logs to see the error:

```shell
$ kubectl logs -n irsa-sample-ns -l app=eks-iam-test-eks
An error occurred (AccessDeniedException) when calling the ListClusters operation: User: arn:aws:sts::<your_aws_account_id>:assumed-role/<your_eks_node_group_id>/<ec2_instance_id> is not authorized to perform: eks:ListClusters on resource: arn:aws:eks:<your_aws_account_region>:<your_aws_account_id>:cluster/*
```

I have redacted the AWS account, region, EKS node group and EC2 instance IDs.

The aws-cli session running in the pod (the SDK, in this case Python botocore) has no linked IAM role granting permission for the requested API call. It uses the irsa-test service account we created in this namespace. That service account has no IRSA annotation, so the SDK falls back to the EKS node's IAM role (instance profile).
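Why does the pod end up with the node role? AWS SDKs try credential sources in order and use the first one that works. The sketch below is a heavily simplified, hypothetical model of that precedence:
1. static keys in the environment;
2. the IRSA web identity variables;
3. the EC2 instance profile.

botocore's real provider chain has more sources than this.

```python
from typing import Mapping


def pick_credential_source(env: Mapping[str, str], imds_available: bool = True) -> str:
    """Return which (simplified) credential source an SDK would end up using."""
    if env.get("AWS_ACCESS_KEY_ID") and env.get("AWS_SECRET_ACCESS_KEY"):
        return "static keys from environment"
    if env.get("AWS_ROLE_ARN") and env.get("AWS_WEB_IDENTITY_TOKEN_FILE"):
        return "IRSA: AssumeRoleWithWebIdentity"
    if imds_available:
        # If the pod can reach the node's instance metadata service (as in the
        # first run above), it inherits the node group's IAM role.
        return "EC2 instance profile (node role)"
    return "no credentials"


if __name__ == "__main__":
    print(pick_credential_source({}))  # first run: no IRSA variables
    print(pick_credential_source({
        "AWS_ROLE_ARN": "arn:aws:iam::<your_aws_account_id>:role/irsa-role",
        "AWS_WEB_IDENTITY_TOKEN_FILE": "/path/to/token",  # placeholder path
    }))
```

In the first run the pod had none of these environment variables, so pick_credential_source({}) lands on the node role. Once the service account is annotated, the webhook-injected AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE take precedence.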

Now let's annotate the irsa-test service account with the IAM role that has the permission(s) the job needs.

```shell
kubectl annotate sa -n irsa-sample-ns irsa-test eks.amazonaws.com/role-arn=arn:aws:iam::<your_aws_account_id>:role/irsa-role
```

This annotation is the linkage between the service account and the IAM role we created before.

Now, let's delete the job:

```shell
kubectl delete -f job-eks.yaml
```

And redeploy it. The manifest is unchanged, so the existing job-eks.yaml can simply be re-applied:

```shell
kubectl apply -f job-eks.yaml
```

Let's check its logs to see what happened:

```shell
$ kubectl logs -n irsa-sample-ns -l app=eks-iam-test-eks
{
    "clusters": [
        "<your_eks_cluster_name>"
    ]
}
```

Now that the irsa-test service account is annotated, the aws-cli running in the pod successfully assumed the irsa-role IAM role. That role has the permission needed for the eks:ListClusters API call.

(optional) See related events in CloudTrail

CloudTrail Event history records management events by default, so you can also see these events there. In Event history, search for User name = system:serviceaccount:irsa-sample-ns:irsa-test. You will find an entry for this service account performing the sts:AssumeRoleWithWebIdentity API call.
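The same Event history search can be scripted. This sketch assumes boto3 and the CloudTrail LookupEvents API (lookup_events with a Username lookup attribute), and it follows NextToken to page through results.

The client is passed in, so the function can be tried against a stub. events_for_user is a hypothetical helper name.

```python
from typing import Any, Iterator


def events_for_user(cloudtrail_client: Any, username: str) -> Iterator[dict]:
    """Yield CloudTrail Event history entries for the given user name."""
    kwargs = {
        "LookupAttributes": [
            {"AttributeKey": "Username", "AttributeValue": username},
        ],
    }
    while True:
        page = cloudtrail_client.lookup_events(**kwargs)
        yield from page.get("Events", [])
        next_token = page.get("NextToken")
        if not next_token:
            break  # no more pages
        kwargs["NextToken"] = next_token
```

For example, call events_for_user(boto3.client("cloudtrail"), "system:serviceaccount:irsa-sample-ns:irsa-test") with credentials allowed to call cloudtrail:LookupEvents. Each yielded event carries fields such as EventName and EventTime.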

And searching for Event name = ListClusters, you will find:

- A first entry for the EKS node (EC2 instance ID) trying to call eks:ListClusters as arn:aws:sts::<your_aws_account_id>:assumed-role/<your_eks_node_group_id>/<ec2_instance_id>, with an AccessDenied error code.
- A second entry for the aws-cli session (botocore) successfully calling eks:ListClusters as arn:aws:sts::<your_aws_account_id>:assumed-role/irsa-role/botocore-session-<id>.
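Both entries identify the caller through an STS assumed-role ARN of the form arn:aws:sts::<account_id>:assumed-role/<role_name>/<session_name>. A small standard-library parser, sketched below as a hypothetical helper, makes it easy to tell the node-role session apart from the irsa-role session.

```python
from typing import NamedTuple


class AssumedRole(NamedTuple):
    account_id: str
    role_name: str
    session_name: str


def parse_assumed_role_arn(arn: str) -> AssumedRole:
    """Split arn:aws:sts::<account>:assumed-role/<role>/<session> into parts."""
    parts = arn.split(":", 5)  # arn, partition, service, region, account, resource
    if len(parts) != 6 or parts[2] != "sts":
        raise ValueError(f"not an STS ARN: {arn}")
    kind, _, rest = parts[5].partition("/")
    if kind != "assumed-role":
        raise ValueError(f"not an assumed-role ARN: {arn}")
    role_name, _, session_name = rest.partition("/")
    return AssumedRole(parts[4], role_name, session_name)


if __name__ == "__main__":
    arn = "arn:aws:sts::111122223333:assumed-role/irsa-role/botocore-session-1234"
    print(parse_assumed_role_arn(arn).role_name)  # irsa-role
```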

Cleanup

Delete the Kubernetes resources:

```shell
# Delete the job
kubectl delete -f job-eks.yaml
# Delete the service account
kubectl delete -f serviceAccount-eks.yaml
# Delete the namespace
kubectl delete ns irsa-sample-ns
```

Delete the IAM role by removing the Terraform code and running terraform apply.

Conclusion

IRSA helps secure your workloads by letting them use temporary credentials instead of static IAM user access keys.

Also, suppose you relied on the EKS node IAM role (EC2 instance profile) instead. You would then have to grant that role every permission needed by every app (pod) that might run on that node. Each application running on EKS may need to access a different AWS service, and therefore needs different permissions. Access becomes hard to track down and harder to maintain.

If you do not want to worry about mixed services policies or maintaining IAM credentials, consider implementing IRSA.

Thank you for stopping by! Do you know other ways to do this? Please let me know in the comments, I always like to learn how to do things differently.